1、Introducing Hi Sun Fintech Global (HSG)

“Hi Sun Fintech Global Limited” (HSG) is one of the subsidiaries of “Hi Sun Technology (China) Limited” (The Hi Sun Group).

The Hi Sun Group originated in the PRC in 2000, focusing on the design, development and deployment of financial service products and solutions for banks and financial institutions. Since then, the Hi Sun Group has grown into one of the top solution integrators for top-tier banks in the PRC, and it was listed on the Hong Kong Stock Exchange in 2002 (stock code 0818).

HSG was established in Hong Kong in March 2020 with a clear goal: to develop, promote and expand the Hi Sun Group’s financial service products and services in overseas markets.

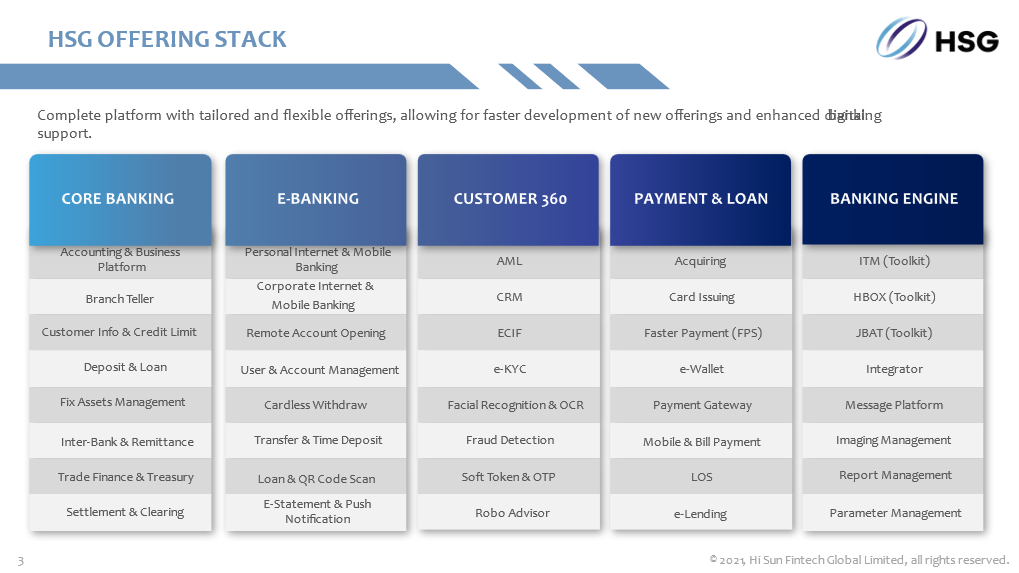

HSG provides a comprehensive product suite of financial service application modules for clients to choose from, as depicted in the accompanying diagram.

Over the years, through evolving technology platforms, development methodologies, tools and skills, and a variety of implementation scenarios, HSG has built up a strong pool of subject matter experts spanning different technology eras. Today, HSG draws on these strengths and this experience to provide Downsizing services: the migration of legacy mainframe systems to cloud platforms and open applications.

2、Why Downsizing

The global financial industry is pursuing IT innovation intensively to meet its competitiveness and modernization objectives. New technologies such as cloud computing, big data, blockchain, the Internet of Things, and artificial intelligence are popular means of achieving these objectives.

Nevertheless, many legacy mainframe systems, valued for their stability and processing power, are still operating in the financial industry, particularly in banks. These systems, once unrivalled, now face increasingly severe challenges such as high cost, closed ecosystems, rigid architecture, and loss of skills.

With the rise of cloud-native distributed technology, financial institutions are actively exploring the feasibility of Downsizing solutions that migrate legacy mainframe systems to cloud or open platforms. The aim is to move away from vendor monopoly once and for all, and thereby achieve independent control, reduce security risks and relieve cost pressure.

3、Strengths of the HSG Downsizing Solution

Drawing on years of practical experience in developing and implementing banking solutions in the mainframe environment, combined with recent research results on cloud-native distributed architecture, HSG provides independent, innovative, intelligent and economical Downsizing solutions and services that help clients move from a closed mainframe environment to open cloud computing platforms.

The HSG Downsizing solution fully preserves the client’s valuable application assets by minimizing intrusive changes to application logic, while increasing the openness, flexibility and scalability of the application. This greatly improves application expansion and integration capabilities, which in turn improves responsiveness to demand and business innovation agility.

The downward migration of infrastructure, application systems, and business data helps clients gradually evolve applications from a centralized host architecture to an open distributed architecture, enabling a multi-platform, multi-technology integration solution. It also provides an opportunity to address new business needs, upgrade the IT architecture, manage data asset quality, and improve overall business support capabilities.

At the same time, through open platforms, open-source technologies, and simplified application development, it helps clients improve team development efficiency, system scalability and disaster tolerance, enabling independent and controllable IT strategies with reduced costs for system software, hardware and human resources.

Some of the key benefits of the HSG Downsizing solution are summarized as follows:

- Cloud-native architecture: The host application system is directly migrated to the cloud, and a cloud-native platform is built to support it. Through containerized deployment, the application has the concurrent computing and elastic deployment capabilities of the cloud.

- Downshift of mainframe facilities: Mainframe application infrastructure is migrated to open platforms (such as Linux or cloud) to reduce dependence on mainframe resources and alleviate the shortage of mainframe talent.

- Application system migration: The host application system code is reconstructed on an open technology stack (such as Java), while the application design assets are fully inherited.

- Business data migration: The host application business data is migrated to open-source and cloud-native databases (such as MySQL and GaussDB) to manage data quality and expand the value of business data.

- DevOps establishment: A DevOps system is established as a supporting tool so that the application can be continuously integrated and continuously released, which greatly improves development efficiency and speeds up the response to business needs.

- IT architecture transformation: The entire bank’s IT architecture is planned, application system silos are broken down through OpenAPI, shared service capabilities (technical, business and data capabilities) are accumulated, and enterprise-level or even industry-level SaaS service capabilities can be built.

4、HSG Downsizing Methodologies

4.1 The Cloud-Native Distributed Architecture

The cloud-native distributed architecture supports rapid migration of host system infrastructure, middleware, databases, applications and data. It helps the client transform to a cloud-native distributed architecture through self-developed technology bases, namely the distributed operation platform, the microservice governance platform and the DevOps management platform, which support continuous integration and continuous deployment.

The cloud-native distributed solution splits the application vertically into independently deployed microservices according to the principle of high cohesion and low coupling, giving it elastic deployment and grayscale release capabilities. The data is partitioned horizontally so that it can be scaled out online.
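The horizontal division of data can be illustrated with hash-based routing. This is a minimal sketch only: the customer ID sharding key, the shard count, and the function names are hypothetical and are not taken from the HSG platform.

```python
import hashlib

SHARD_COUNT = 4  # hypothetical number of database shards


def shard_for(customer_id: str, shard_count: int = SHARD_COUNT) -> int:
    """Map a sharding key to a shard index using a stable hash."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % shard_count


def group_by_shard(customer_ids, shard_count: int = SHARD_COUNT):
    """Group sharding keys by the shard that would store their rows."""
    groups = {index: [] for index in range(shard_count)}
    for customer_id in customer_ids:
        groups[shard_for(customer_id, shard_count)].append(customer_id)
    return groups
```

With plain modulo routing, changing the shard count relocates most keys. A platform that expands online would more likely use consistent hashing or a routing table, which this sketch does not attempt.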

4.2 Host Infrastructure Downshift

Mainframe host infrastructure downshift is the focus and core element of the entire application downshift. It helps clients move quickly from mainframes (Z series), midrange computers (AS/400) and minicomputers (RS/6000, HP, etc.) to open platforms (x86), or directly to the cloud (such as Huawei Cloud).

Application system code remains unchanged. A Cobol compiler on a Linux-like operating system is used to recompile the application system code, and continuous integration and continuous deployment are performed through the DevOps tool chain to quickly implement the infrastructure downshift of the application system.

The business processes, business logic, and service interfaces remain unchanged; only API integration changes and regression testing are required during the downshift. This greatly reduces the involvement of business personnel, avoids impact on production business, and enables smooth switching between the old and new systems.

4.3 Host Middleware Migration

The downshift of middleware is the technical foundation of, and a prerequisite for, technical architecture refactoring. It helps clients move smoothly from the host middleware (CICS) to an open technology base, or directly use cloud-native PaaS capabilities (such as the Huawei Cloud Roma platform).

A cloud-native distributed technology base (middleware) is built on the open-source SpringBoot + SpringCloud technology stack, combined with mature open-source components. It encapsulates the operating system, database and other infrastructure so that they are transparent to applications, and it reduces the underlying-technology knowledge required of application developers, improving application development quality and delivery efficiency.

4.4 Host Application System Migration

Based on an evaluation of the application’s business value and technical specifications, the technology stack of the entire application system can be rebuilt from procedural languages such as Cobol, RPG, and C to a Java technology stack that is actively used across the IT industry.

The application migration is executed with automatic migration tools, supplemented by manual optimization. HSG’s self-developed Cobol-to-Java and RPG-to-Java migration tools migrate host applications automatically, quickly and in a standardized way. This reduces both the schedule risk of manual code migration, whose project cycle is hard to control, and the quality risk of insufficient test coverage. Manual optimization is then applied to restructure and performance-tune selected key code, improving the overall flexibility and availability of the system.

4.5 Host Application Data Migration

The data model is kept unchanged and the database is downshifted. The host DB2 database can be migrated to an open-source database (such as MySQL) or a cloud-native database (such as GaussDB). During the migration, the self-developed migration tool automatically converts the host’s EBCDIC-encoded data to UTF-8-encoded data on the open platform.
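The EBCDIC to UTF-8 conversion can be sketched with Python's built-in codecs. This is an illustrative sketch, not the HSG migration tool: the code page `cp037` and the fixed-width record layout are assumptions, and a real host may use a different EBCDIC code page.

```python
# Hypothetical fixed-width record layout: (field name, offset, length).
FIELDS = [("account_no", 0, 6), ("name", 6, 5)]


def ebcdic_to_utf8(raw: bytes, codepage: str = "cp037") -> bytes:
    """Transcode EBCDIC text bytes to UTF-8 bytes."""
    return raw.decode(codepage).encode("utf-8")


def decode_record(raw: bytes, fields=FIELDS, codepage: str = "cp037") -> dict:
    """Split a fixed-width EBCDIC record into text fields, trimming padding."""
    return {
        name: raw[offset:offset + length].decode(codepage).rstrip()
        for name, offset, length in fields
    }
```

Only character fields can be transcoded this way. Binary and packed-decimal fields in a host record must be decoded by their own rules, because byte-for-byte character translation would corrupt them.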

After the migration is completed, the migrated data is checked with a data comparison tool to ensure semantic consistency and content integrity before and after migration, and manual sampling tests are then used to verify the accuracy and reliability of the migrated data.
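One common way to compare data before and after migration is to digest each row and compare the digests by primary key. The sketch below assumes rows are tuples whose key sits at a known index. The function names are hypothetical and do not describe the actual HSG comparison tool.

```python
import hashlib
import json


def row_digest(row) -> str:
    """Return a stable digest of a row's values."""
    canonical = json.dumps(list(row), default=str, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, target_rows, key_index: int = 0) -> dict:
    """Report keys missing from the target, extra in it, or with changed content."""
    source = {row[key_index]: row_digest(row) for row in source_rows}
    target = {row[key_index]: row_digest(row) for row in target_rows}
    return {
        "missing": sorted(key for key in source if key not in target),
        "extra": sorted(key for key in target if key not in source),
        "mismatched": sorted(
            key for key in source if key in target and source[key] != target[key]
        ),
    }
```

The source rows must already be transcoded and normalized (for example, trailing padding trimmed) before digesting; otherwise every row would be reported as mismatched.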

With the development of the Internet of Things, the amount of data will grow explosively. Big data platforms, data lakes and similar technologies can be established to fully exploit the value of this data. In addition to supporting application business functions, big data platforms can also support data applications such as real-time marketing, risk management and executive brains.

5、Downsizing Tools

5.1 The Downsizing Implementation Process

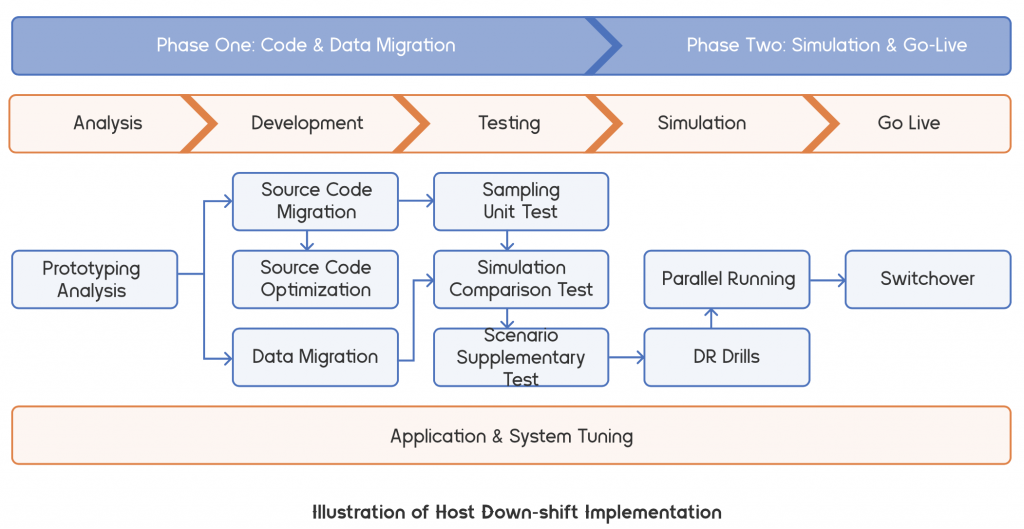

The key solution for host downshifting covers the entire software life cycle. Key tasks are iterated rapidly through automated, standardized self-developed tools, and smooth delivery of the downsizing project is achieved through objective-oriented, innovative implementation processes, including solutions for code migration, continuous integration, data migration, data sharding, parallel simulation testing, and production switching.

Introducing parallel simulation tests and continuous integration of the functional requirements of the old and new systems throughout the downshifting process shortens the business requirement freeze period of the downshifting project, so that the new system automatically keeps in step with the business requirements of the production system.

5.2 Application Transformation Stage

System Analysis and Solution Formulation

Analyze the functional scope, business processes, system architecture, and technical specifications of the original system, and evaluate the implementation strategy, path and process of the entire application downshift, as well as the key solutions involved.

According to the target system architecture, confirm whether to introduce a distributed operation platform and a microservice governance platform, conduct capability assessment and difference analysis of the platforms, and finally formulate a project implementation scope and implementation plan that matches customer needs and resource allocation.

Code Conversion and Optimization

The migration of the host Cobol/RPG code is completed automatically by the code migration tool. The tool takes the source code of the original system as input, performs lexical, syntactic and semantic analysis, and generates a syntax tree. Using the Cobol2Java/RPG2Java migration rule base, configured according to the development specifications of the original system, it converts this tree into a Java syntax tree. The final Java code is then generated from Java code templates, and the migrated code is compiled and built.
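The pipeline of lexical analysis, parsing, rule-based tree conversion and template generation can be shown in miniature. The sketch below is a toy and not the HSG migration tool: it handles only two statement forms (`MOVE x TO y.` and `ADD x TO y.`) with integer literals, and the rule names, templates and naming convention are all hypothetical.

```python
import re

TOKEN = re.compile(r"[A-Za-z0-9-]+|\.")
# Hypothetical rule base: Cobol verb -> Java node kind.
RULES = {"MOVE": "assign", "ADD": "add_assign"}
# Hypothetical Java code templates, one per Java node kind.
TEMPLATES = {"assign": "{target} = {source};", "add_assign": "{target} += {source};"}


def tokenize(source: str):
    """Lexical analysis: split the source into words and full stops."""
    return TOKEN.findall(source.upper())


def parse(tokens):
    """Syntactic analysis: build a list of Cobol statement nodes."""
    statements = []
    for start in range(0, len(tokens), 5):
        verb, source, to, target, dot = tokens[start:start + 5]
        if verb not in RULES or to != "TO" or dot != ".":
            raise ValueError(f"unsupported statement at token {start}")
        statements.append({"verb": verb, "source": source, "target": target})
    return statements


def java_name(cobol_name: str) -> str:
    """Convert WS-TOTAL style names to wsTotal; leave integer literals alone."""
    if cobol_name.isdigit():
        return cobol_name
    head, *rest = cobol_name.lower().split("-")
    return head + "".join(part.capitalize() for part in rest)


def transform(statements):
    """Apply the rule base to turn Cobol nodes into Java nodes."""
    return [
        {"kind": RULES[s["verb"]], "source": java_name(s["source"]),
         "target": java_name(s["target"])}
        for s in statements
    ]


def migrate(source: str):
    """Run the full pipeline and render Java statements from templates."""
    return [TEMPLATES[node["kind"]].format(**node)
            for node in transform(parse(tokenize(source)))]
```

For example, `migrate("MOVE 0 TO WS-TOTAL. ADD WS-AMOUNT TO WS-TOTAL.")` yields the two Java statements `wsTotal = 0;` and `wsTotal += wsAmount;`. A real migration must also carry over data definitions and fixed-point decimal semantics, which this toy ignores.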

At the same time, manual optimization is carried out for programs with non-standard code or poor performance. These optimizations are recorded as exceptions in the migration rule base, so that automatic code migration and manual optimization work together without conflict.

Automatic Testing and Supplementary Testing

In the testing phase, sample unit tests on the migrated code iteratively verify the accuracy of the migration rules and the correctness of the migrated code. In addition, intelligent simulation comparison tests are introduced. A simulation comparison test imports production traffic to replay real production transactions, and compares the replay results of the new system with the actual results of the production system to verify the quality of the entire migration. This reduces the workload and cost of manual testing and ensures that test cases cover production business scenarios and data.

At the same time, manual supplementary tests are conducted for production scenarios that cannot be covered by replay and for selected key business processes, including special tests for interest accrual and settlement and for year-end settlement. Non-functional tests, including system performance tests and high availability tests, are also added to ensure stable operation of the system after it is put into production.

5.3 Simulation Testing Stage

Parallel Simulation Testing

Automatic version tracking and continuous integration of the old and new systems are implemented at the parallel simulation stage to keep the two systems comparable, so that the simulation can run continuously and the old and new systems effectively run in parallel.

The host system collects the transaction request and response messages received by the application. The request message is replicated through a network device or gateway and sent to the simulation machine, which obtains the response message from the new system. The comparison machine then compares the response results and assertion data of the actual production response message with those of the new system response message received through the simulation machine.
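The replay-and-compare loop can be sketched as follows. This is a minimal sketch under stated assumptions: messages are modelled as dictionaries, the new system is a callable, and the set of volatile fields excluded from comparison is hypothetical. The 99.99% threshold is the acceptance standard this document states for production switching.

```python
VOLATILE_FIELDS = {"timestamp", "trace_id"}  # hypothetical fields that always differ
ACCEPTANCE_THRESHOLD = 0.9999  # the 99.99% accuracy standard


def normalize(response: dict, ignore=VOLATILE_FIELDS) -> dict:
    """Drop fields that legitimately differ between the two systems."""
    return {key: value for key, value in response.items() if key not in ignore}


def compare_replay(recorded, new_system, ignore=VOLATILE_FIELDS):
    """Replay recorded requests and compare responses with production.

    recorded: list of (request, production_response) pairs.
    new_system: callable taking a request and returning a response dict.
    Returns (accuracy, list of requests whose responses differ).
    """
    mismatches = []
    for request, production_response in recorded:
        new_response = new_system(request)
        if normalize(production_response, ignore) != normalize(new_response, ignore):
            mismatches.append(request)
    total = len(recorded)
    accuracy = (total - len(mismatches)) / total if total else 0.0
    return accuracy, mismatches


def meets_acceptance(accuracy: float, threshold: float = ACCEPTANCE_THRESHOLD) -> bool:
    """Check the replay accuracy against the acceptance threshold."""
    return accuracy >= threshold
```

The mismatch list matters as much as the accuracy figure, since each differing request points to a migration rule or data issue to investigate.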

Production Switching

After the parallel simulation test reaches the four-nines (99.99%) accuracy standard, the system is submitted to the business acceptance and regulatory departments for acceptance. Once acceptance is passed, the new system can be put into production. A simple switching scheme that only requires network adjustment is used, and the one-time switch is carried out while business is closed on a holiday or weekend. The closure time and the requirement freeze period are both short, and the switching risk is controllable.

An overall emergency plan is also prepared to support rapid rollback. After a successful and smooth switchover, the new system goes into external operation and the old system is taken offline.

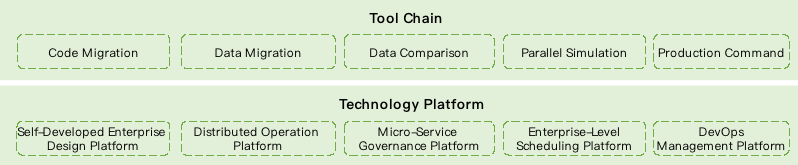

5.4 Tool Chain

The downshifting process involves complex matters such as establishing implementation processes and reshaping personnel skills. The self-developed tool chain standardizes the downshifting process, automates its execution, and reduces the staffing required, in particular avoiding the need for large numbers of business personnel and testers. In sum, the tool chain not only improves the overall efficiency and quality of the downshifting project but also eases the challenges of personnel organization, management and cost, ultimately reducing costs and increasing efficiency for clients.

Code Migration Tool

The HSG self-developed code migration tool standardizes the code conversion process and its results, and supports agile, iterative delivery. The conversion process eliminates heavy manual refactoring of the code and avoids the ambiguity of human interpretation, improving the efficiency and quality of code conversion.

The code migration tool is designed on compiler principles. It scans Cobol/RPG source files and performs lexical and syntactic analysis to generate a Cobol/RPG syntax tree, converts that tree into a Java syntax tree according to the Cobol2Java/RPG2Java mapping rules, and then applies predefined Java code templates to generate the final Java target code.

Data Migration Tool

The HSG self-developed data migration tool supports both full and incremental data migration, and completes character set transcoding during the migration itself. The tool logs the entire migration process, supports resuming from a breakpoint, and reduces intermediate data landing, which ensures processing efficiency and makes data migration problems quick to locate and resolve. Incremental data migration shortens the time window for data migration and production switching, achieving a smooth switch with “zero” downtime. The host collects and transmits database logs; the data synchronization machine parses and transcodes the logs and generates SQL statements that are replayed against the distributed database to ensure the eventual consistency of database transaction results.
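Incremental migration by log replay, with resumption from a checkpoint, can be sketched as below. This is an assumption-laden illustration rather than the HSG tool: the log entry format (`seq`, `table`, `op`, `key`, `values`), the `id` primary key column, and the use of SQLite as a stand-in target database are all hypothetical.

```python
import sqlite3


def to_sql(entry: dict):
    """Turn one parsed log entry into a parameterized SQL statement."""
    table, op = entry["table"], entry["op"]
    values = entry.get("values", {})
    columns = list(values)
    if op == "INSERT":
        placeholders = ", ".join("?" for _ in columns)
        sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
        return sql, [values[c] for c in columns]
    if op == "UPDATE":
        assignments = ", ".join(f"{c} = ?" for c in columns)
        return (f"UPDATE {table} SET {assignments} WHERE id = ?",
                [values[c] for c in columns] + [entry["key"]])
    if op == "DELETE":
        return f"DELETE FROM {table} WHERE id = ?", [entry["key"]]
    raise ValueError(f"unsupported operation: {op}")


def replay(conn: sqlite3.Connection, log_entries, checkpoint: int = 0) -> int:
    """Apply entries newer than the checkpoint in order; return the new checkpoint."""
    for entry in sorted(log_entries, key=lambda e: e["seq"]):
        if entry["seq"] <= checkpoint:
            continue  # already applied before the interruption
        sql, params = to_sql(entry)
        with conn:  # commit each entry as its own transaction
            conn.execute(sql, params)
        checkpoint = entry["seq"]
    return checkpoint
```

Table and column names are interpolated directly here because the sketch treats log metadata as trusted. A production tool would also persist the checkpoint atomically with the applied change so that a restart never replays or skips an entry.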

Data Comparison Tool

The HSG self-developed data comparison tool compares the returned results and assertions of online test response messages, the processing results of batch test data, and the download files generated by online batches and end-of-day batches. Throughout the comparison, the tool compares automatically according to transaction time, which improves the coverage and accuracy of data comparison.

Parallel Simulation Tools

The HSG self-developed parallel simulation tool provides complete and accurate replay testing of actual production business messages in the new system. The parallel simulation process is a fast, iterative verification. Through an asynchronous simulation strategy, the coverage and accuracy of production message replay can be tested to confirm that the old and new systems run in parallel, which facilitates acceptance.

Production Command Tool

The HSG self-developed production command tool visualizes the production process and executes production scheduling automatically, so that participants only need to follow the instructions. The production process is rehearsed in multiple rounds of drills, following a standard production switching process with a fine-grained division of labor, and it is monitored and managed in real time.

5.5 Technology Platform

The downshifting work drives innovative designs such as system architecture reform and technology base reconstruction. Stable system operation and efficient service governance are supported by the self-developed enterprise design platform, distributed operation platform, microservice governance platform, enterprise-level scheduling platform, and DevOps management platform, ultimately realizing cloud-native parallel computing and elastic deployment.